Stability AI, the company behind the popular AI image generator Stable Diffusion, has launched StableLM, a suite of text-generating AI models designed to rival systems like OpenAI’s GPT-4 and ChatGPT. Available in “alpha” on GitHub and Hugging Face, StableLM can generate both code and text. It was trained on a new experimental dataset built on The Pile, which Stability AI says is three times larger than the standard Pile.

According to Stability AI, the StableLM models show that small, efficient models can deliver high performance with appropriate training. The company says language models will form the backbone of the digital economy and that it wants everyone to have a voice in their design. (https://stability.ai/blog/stability-ai-launches-the-first-of-its-stablelm-suite-of-language-models)

The Pile is a mix of internet-scraped text samples from sources including PubMed, StackExchange and Wikipedia. Stability AI says it built a custom training set for StableLM, but it did not say whether the models share the limitations of other language models, such as generating toxic responses to certain prompts and hallucinating facts.

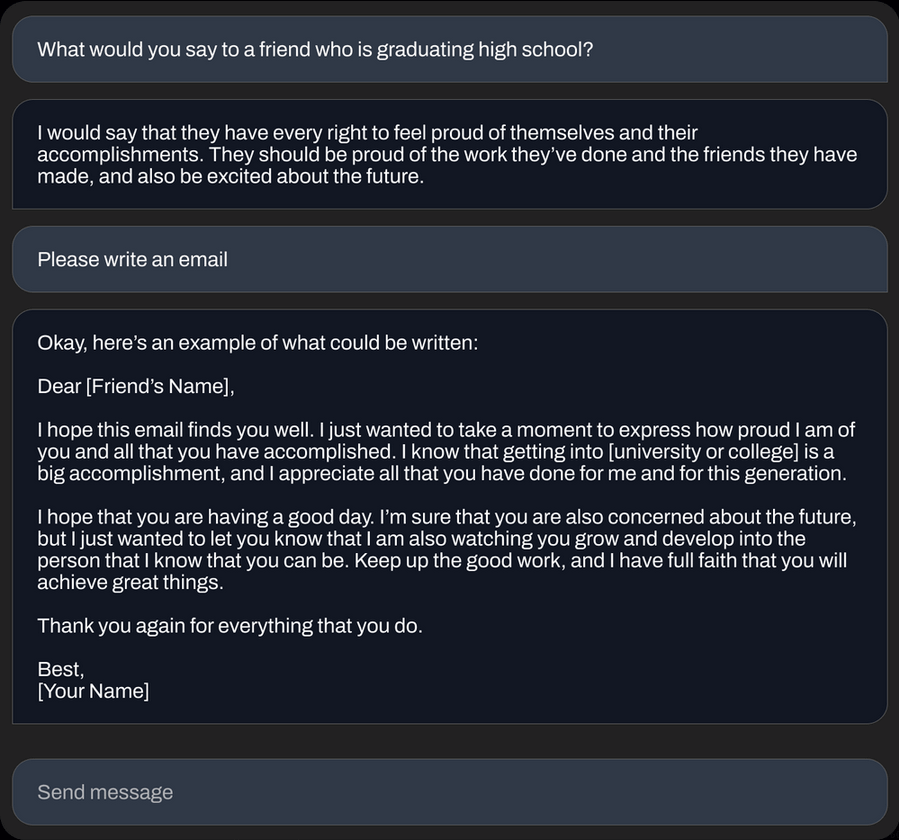

The models appear capable of handling a variety of tasks, particularly the fine-tuned versions included in the alpha release. Fine-tuned on open-source datasets using Alpaca, a technique developed at Stanford, these StableLM models behave like ChatGPT, responding to instructions such as “write a cover letter for a software developer” or “write lyrics for an epic rap battle song.”

The launch of StableLM follows a trend of companies releasing open-source text-generating models as businesses large and small vie for visibility in the generative AI space. The past year has seen releases from Meta, Nvidia and independent groups like the Hugging Face-backed BigScience project. Some of these models are claimed to perform roughly on par with proprietary models such as GPT-4 and Anthropic’s Claude, which are available only through an API.

However, some researchers have expressed concern that open-source models like StableLM could be used for unsavory purposes like creating phishing emails or aiding malware attacks. Stability AI, on the other hand, argues that open-sourcing is the right approach for transparency and fostering trust. The company claims that researchers can verify performance, work on interpretability techniques, identify potential risks and help develop safeguards when given open, fine-grained access to models.

It remains to be seen how StableLM will fare in the competitive generative AI space. Still, Stability AI has never shied away from controversy, and the launch of StableLM suggests the company is willing to take on the industry’s biggest players.