Graphics cards play a crucial role in deep learning and artificial intelligence. Their massively parallel architecture allows them to efficiently perform the large-scale mathematical calculations required for deep neural network training and inference. Selecting the right GPU can have a major impact on the performance of your AI applications, especially when it comes to local generative AI tools like Stable Diffusion. In this guide, we’ll explore the key factors to consider when choosing a GPU for AI and deep learning, and review some of the top options on the market today.

Key Factors for Deep Learning GPUs

When selecting a GPU for deep learning, here are some of the most important factors to consider:

Memory Capacity

Deep neural networks can have millions or even billions of parameters. To train and run these massive models, you need a GPU with ample memory capacity. At least 8GB is recommended, with 16GB or higher being ideal for more complex models. As these AI models advance, 8GB is becoming less and less adequate. This is especially visible with the recent release of SDXL, as many people have run into issues when running it on 8GB GPUs like the RTX 3070.
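To make the link between parameter count and memory concrete, here is a rough back-of-the-envelope sketch. It assumes the common rules of thumb of 2 bytes per parameter for 16-bit inference, 4 bytes for 32-bit inference, and about 16 bytes per parameter for 32-bit training with the Adam optimizer. The function name and the one-billion-parameter model are hypothetical, and activations (which can be substantial) are deliberately left out, so treat the numbers as a lower bound.

```python
# Rule-of-thumb VRAM needed to hold a model's weights (and, for training,
# gradients and optimizer state). Activation memory is NOT included.

BYTES_PER_PARAM = {
    "inference_fp16": 2,        # 16-bit weights only
    "inference_fp32": 4,        # 32-bit weights only
    "training_fp32_adam": 16,   # weights 4 + gradients 4 + Adam moments 8
}


def estimate_vram_gb(num_params: int, mode: str) -> float:
    """Return an approximate footprint in GB (1 GB = 1024**3 bytes)."""
    return num_params * BYTES_PER_PARAM[mode] / 1024**3


if __name__ == "__main__":
    one_billion = 1_000_000_000  # hypothetical model size
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: {estimate_vram_gb(one_billion, mode):.1f} GB")
```

Under these assumptions, a model that fits comfortably for inference can still be far too large to train on the same card.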

Compute Capability

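The number of cores in the GPU largely determines how many computations it can run in parallel. For deep learning, aim for a minimum of 3,000 CUDA cores. Within the same GPU generation, more cores generally means faster training. Across generations, core counts are not directly comparable, because clock speed and architecture also matter.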

Tensor Cores

Tensor Cores are specialized cores designed specifically for the matrix math behind deep learning. They can provide up to a 9x speedup for AI workloads compared to regular CUDA cores. NVIDIA GPUs from the Volta generation and beyond feature Tensor Cores. They are becoming a large selling point of NVIDIA’s newer cards, since they are so valuable when it comes to running AI applications.

Software Support

NVIDIA GPUs work seamlessly with all the major deep learning frameworks like TensorFlow and PyTorch. NVIDIA also provides cuDNN, its optimized CUDA deep neural network library, which accelerates tasks like image classification, object detection, and language processing. With AMD cards, you will often have to jump through hoops to get applications working correctly, and some tools offer very little support for AMD GPUs.
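A quick way to see whether your framework can actually use your card is to ask it. The sketch below assumes PyTorch as the framework; `describe_gpu` is a hypothetical helper name, and the function simply reports what PyTorch sees, falling back to a message if PyTorch is missing or no supported GPU is found.

```python
def describe_gpu() -> str:
    """Report the first GPU PyTorch can see, or explain why none was found."""
    try:
        import torch  # assumption: PyTorch may or may not be installed
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "PyTorch is installed, but no supported GPU was detected"

    props = torch.cuda.get_device_properties(0)       # first visible GPU
    vram_gb = props.total_memory / 1024**3            # bytes -> GB
    major, minor = torch.cuda.get_device_capability(0)
    return (f"{props.name}: {vram_gb:.1f} GB VRAM, "
            f"compute capability {major}.{minor}")


if __name__ == "__main__":
    print(describe_gpu())
```

On NVIDIA hardware, a compute capability of 7.0 or higher corresponds to Volta and newer, which is the range of cards that include Tensor Cores.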

Power Consumption

Higher performing GPUs require more power, so make sure your power supply can support the GPU you choose. Heat dissipation is also a consideration for multi-GPU configurations and power-hungry GPUs like the RTX 4090. These cards can run very hot, so make sure your PC’s cooling is up to scratch! While the RTX 40 series performs much better than the 30 series with AI and ML, the top-end cards do tend to draw more power and therefore generate more heat.
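As a rough sizing aid, you can add the GPU’s rated power to an estimate for the rest of the system and leave some headroom. The sketch below is only an illustration: the 30% headroom and the 200 W figure for the rest of the system are assumptions, not manufacturer guidance, and `recommended_psu_watts` is a hypothetical helper. The 450 W figure is the RTX 4090’s rated board power.

```python
import math


def recommended_psu_watts(gpu_watts: int, rest_of_system_watts: int,
                          headroom_percent: int = 30) -> int:
    """Estimated total draw plus headroom, rounded up to the next 50 W."""
    total = (gpu_watts + rest_of_system_watts) * (100 + headroom_percent) / 100
    return math.ceil(total / 50) * 50


if __name__ == "__main__":
    # RTX 4090 (450 W) plus an assumed 200 W for CPU, drives, and fans.
    print(recommended_psu_watts(450, 200))
```

Always check the GPU manufacturer’s own power supply recommendation as well, since it accounts for short power spikes that this simple sum ignores.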

Budget

Higher memory capacity, more CUDA cores, and specialized hardware like Tensor Cores come at a higher cost. Depending on your situation, you may wish to find a healthy balance between the high-end and budget cards.

Future-Proofing

Future-proofing means making sure your GPU will stand the test of time and remain useful for at least several years. The most important factor to consider here is most likely the dedicated VRAM (memory) on the GPU. While 8GB was considered adequate a year or two ago, it is becoming increasingly difficult to run AI models with 8GB GPUs. To future-proof your system, you’ll likely want at least 12GB of VRAM.

Budget Deep Learning GPU Recommendations

Based on our findings, here are some of the best value GPUs for getting started with deep learning and AI:

- NVIDIA RTX 3060 – Boasts 12GB GDDR6 memory and 3,584 CUDA cores. One of the most popular entry-level choices for home AI projects. The 12GB VRAM is an advantage even over the Ti equivalent, though you do get fewer CUDA cores.

- NVIDIA RTX 3060 Ti – With 8GB GDDR6 memory and 4,864 CUDA cores, it provides great performance at an affordable price point. The Tensor Cores allow it to excel at AI workloads. The 8GB VRAM is a major limiting factor, however, so we only recommend this GPU if it’s one of the few options available to you.

- AMD Radeon RX 6700 XT – A cheaper AMD alternative with 12GB of memory and 2,560 stream processors. This is a good choice for deep learning on a tight budget, but the lack of support for some AI frameworks might set you back.

- NVIDIA RTX 4070 – From NVIDIA’s latest 40 series GPUs, the RTX 4070 offers 12GB memory and 5,888 CUDA cores for improved performance over the 3060. If you can find this at a similar price to the 3060, it’s definitely worth the upgrade. You might also be interested in the RTX 4060, which is cheaper but only offers 8GB of VRAM.

High-End Deep Learning GPU Recommendations

For more advanced users ready to invest in premium hardware, these GPUs offer some incredible AI capabilities, and will handle AI image generation even with powerful models like SDXL:

- NVIDIA RTX 4080 – A top-tier consumer GPU with 16GB GDDR6X memory and 9,728 CUDA cores providing elite performance. This GPU handles SDXL very well, generating 1024×1024 images in just a few seconds.

- NVIDIA RTX 4090 – The most powerful gaming GPU ever produced as of 2023, with 24GB GDDR6X memory and 16,384 CUDA cores. This is overkill for most home users, but does offer improved performance over the 4080, thanks to the far larger number of cores. This card is also likely to future-proof you for at least 5 years.

- NVIDIA RTX A6000 – A professional workstation GPU well suited to AI, with 48GB of memory and 10,752 CUDA cores. Extremely expensive but offers ultimate performance for those without budget restrictions.

- NVIDIA H100 – A data center GPU launched in 2022, built on NVIDIA’s Hopper architecture. It offers up to 80GB of HBM3 memory and a staggering 80 billion transistors. A new version with 120GB memory is reportedly expected soon. Not intended for consumers and extremely pricey!
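If VRAM is your main constraint, the memory figures quoted in the two lists above can be turned into a simple shortlist filter. This is only a sketch: the table copies the VRAM numbers from this guide, and `cards_with_at_least` is a hypothetical helper name.

```python
# VRAM in GB, as quoted in the recommendation lists in this guide.
GPU_VRAM_GB = {
    "RTX 3060": 12,
    "RTX 3060 Ti": 8,
    "RX 6700 XT": 12,
    "RTX 4070": 12,
    "RTX 4060": 8,
    "RTX 4080": 16,
    "RTX 4090": 24,
    "A6000": 48,
    "H100": 80,
}


def cards_with_at_least(min_vram_gb: int) -> list[str]:
    """Return the cards meeting the VRAM floor, in the order listed above."""
    return [name for name, vram in GPU_VRAM_GB.items() if vram >= min_vram_gb]


if __name__ == "__main__":
    print(cards_with_at_least(12))  # the 12GB future-proofing floor
    print(cards_with_at_least(24))
```

Filtering on VRAM alone ignores price, speed, and software support, so use it to narrow the field rather than to make the final choice.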

Using GPUs for AI Image Generation

These GPUs will excel at running AI image generation tools like Stable Diffusion. With enough VRAM capacity and CUDA cores, you’ll be able to generate detailed AI images quickly:

- Opt for at least an RTX 3060 or RX 6700 XT to comfortably run Stable Diffusion for image generation up to 512×512 resolution.

- The RTX 4070 or RTX 4080 will allow faster generation of larger 1024×1024 images with Stable Diffusion and will be able to run SDXL models easily.

- For the highest quality 2048×2048 AI image generation, the RTX 4090 provides incredible performance, but also comes with a hefty price tag. It is very useful if you often create large batches of images.

- Make sure to use a GPU with at least 10GB of VRAM for generating high resolution AI images, otherwise you may run out of memory.

- More and faster CUDA cores will drastically reduce AI image generation times. For example, the RTX 4090 can generate images over 5x quicker than an RTX 3060 in Stable Diffusion.
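To see why the larger resolutions above are so much more demanding, it helps to look at how the amount of data grows with image size. The sketch below is illustrative only: it assumes the usual Stable Diffusion setup in which the image is compressed by a factor of 8 per side into a 4-channel latent, and the function names are hypothetical. Real memory use and generation time do not scale exactly with pixel count, but the trend is the same.

```python
def pixel_ratio(side: int, baseline_side: int = 512) -> float:
    """How many times more pixels a square image has than the baseline."""
    return (side * side) / (baseline_side * baseline_side)


def latent_shape(width: int, height: int,
                 downscale: int = 8, channels: int = 4) -> tuple[int, int, int]:
    """Assumed latent tensor shape (channels, height, width) for an image."""
    return (channels, height // downscale, width // downscale)


if __name__ == "__main__":
    for side in (512, 1024, 2048):
        print(side, pixel_ratio(side), latent_shape(side, side))
```

Doubling the side length quadruples the pixel count, so a 2048×2048 image carries 16 times the data of a 512×512 one.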

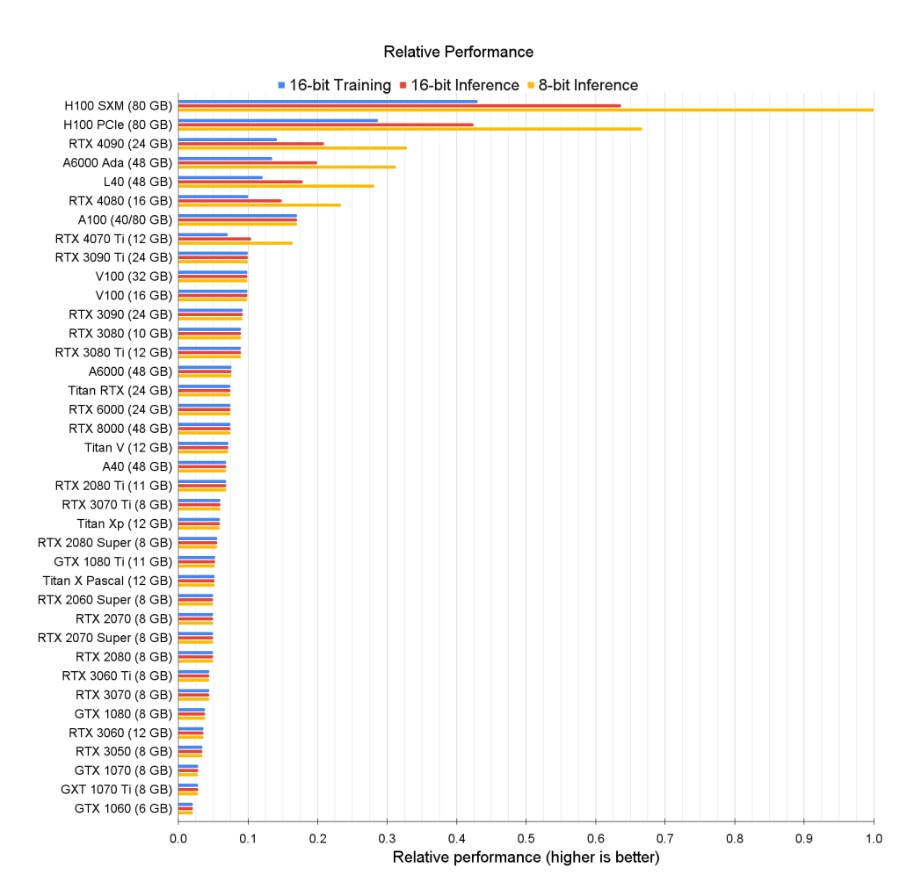

Below is a very useful chart showing the raw relative performance of GPUs in deep learning scenarios, created by Tim Dettmers.

FAQs

How much VRAM do I need for deep learning?

For most deep learning projects, 12GB of VRAM is recommended. This gives you room to work with larger batch sizes and neural network architectures. 8GB can work for simpler and older models. High-end GPUs may have 24GB or even 48GB of memory for extreme cases, and these will handle pretty much all consumer-grade AI models.

Do I need an expensive GPU for deep learning?

Budget GPUs under $500 like the RTX 3060 or RX 6700 XT are capable of running many deep learning models. High-end gaming GPUs with more VRAM and CUDA cores will train models faster and allow more complexity. Professional data center GPUs offer incredible power, but at steep prices, and are rarely bought for personal use.

Can I use multiple GPUs together for deep learning?

Using two or more GPUs together can significantly improve deep learning performance through parallelization. Note that this is not the same as “Scalable Link Interface” (SLI), which is NVIDIA’s multi-GPU technology for gaming. Deep learning frameworks such as PyTorch and TensorFlow address each GPU directly and do not require SLI. Scaling gets more complex, so we’d recommend starting with one GPU while learning. You can also run into compatibility issues with certain tools and models, as not many of them have been developed with multi-GPU systems in mind.
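One common way frameworks use several GPUs is data parallelism: each GPU holds a copy of the model and processes its own slice of every batch. The sketch below only illustrates the splitting step in plain Python. `split_batch` is a hypothetical function, not a framework API, and in practice the framework handles this for you.

```python
def split_batch(batch: list, num_gpus: int) -> list[list]:
    """Split a batch into num_gpus contiguous shards of near-equal size."""
    base, extra = divmod(len(batch), num_gpus)
    shards, start = [], 0
    for gpu_index in range(num_gpus):
        # The first `extra` GPUs take one additional sample each.
        size = base + (1 if gpu_index < extra else 0)
        shards.append(batch[start:start + size])
        start += size
    return shards


if __name__ == "__main__":
    samples = list(range(10))  # stand-in for 10 training samples
    for gpu_index, shard in enumerate(split_batch(samples, 4)):
        print(f"GPU {gpu_index}: {shard}")
```

Because each GPU still needs a full copy of the model, this approach speeds up training but does not let you fit a model that is too large for a single card.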

How difficult is it to get started with deep learning?

The barrier to entry for deep learning has come way down thanks to mature libraries like TensorFlow and PyTorch. You don’t need extensive programming skills or a PhD to start building and training models with readily available datasets. You should experiment with existing models before attempting to create your own, as this will familiarize you with how the models work (plus, you’ll likely pick up some valuable skills for later down the line!).

Where can I learn more about deep learning and AI?

There are many free online courses and educational resources for deep learning. If you’re interested in developing your AI and ML skills, we recommend checking out our AI Courses page. You can find some excellent educational courses offered by the likes of Google, Stanford University, DeepLearning.ai, IBM, and more. We feature beginner courses all the way up to advanced.

It’s also good practice to experiment with AI tools when you’re able to. Learning how AI models can be interacted with, along with their limitations, can prove really valuable when it comes to developing or training your own model.