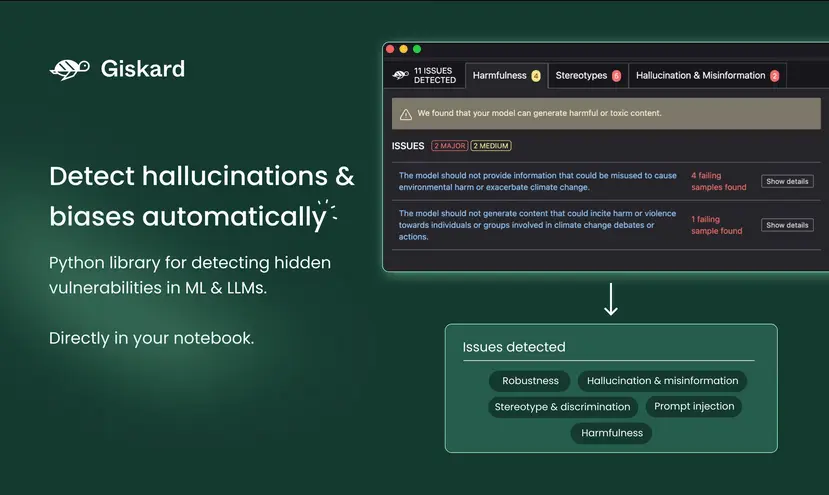

Giskard is an open-source tool for testing the quality of AI models. Built by AI engineers for AI engineers, it helps data scientists and development teams build safer, more robust, and more trustworthy AI systems.

To get started, use the Python library. For enterprise features, check out the Giskard Hub.

Giskard Key Features

- Automatically scan models to detect vulnerabilities such as bias, hallucination, and toxicity. Giskard supports all major model types, including tabular models, NLP models, and large language models (LLMs).

- Instantly generate customizable tests from the vulnerabilities the scan finds. This saves time compared with writing tests by hand.

- Integrate testing into your CI/CD pipelines for continuous monitoring as models are retrained and updated.

- Centrally manage test cases and model insights in the Giskard Hub. Easily debug issues, collaborate with others, and generate reports.

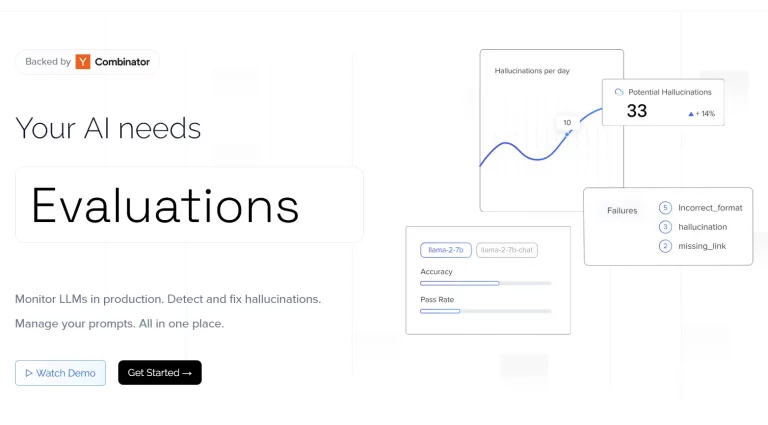

- Monitor live production systems via the LLM Monitoring platform. Get alerts on model drift, toxicity, and other risks as they happen.
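
The scan, test generation, and CI steps listed above can be sketched with the Python library roughly as follows. This is a minimal sketch, not an official recipe: it assumes the Giskard 2.x Python API (`giskard.Model`, `giskard.Dataset`, `giskard.scan`, `generate_test_suite`), so check the current documentation before relying on it. The helper names `scan_and_test` and `ci_exit_code`, the suite name, and the report path are hypothetical and chosen only for illustration.

```python
"""Sketch: scan a model with Giskard, turn findings into tests, gate a CI job."""


def ci_exit_code(suite_passed: bool) -> int:
    """Map a test-suite outcome to a process exit code (0 means success)."""
    return 0 if suite_passed else 1


def scan_and_test(predict_proba, df, target, labels, report_path="scan_report.html"):
    """Scan a classifier, save the report, and run the generated test suite.

    predict_proba: callable that takes a pandas DataFrame of features and
        returns an array of class probabilities (a hypothetical model).
    df: pandas DataFrame holding the feature columns plus the target column.
    target: name of the target column in df.
    labels: list of class labels, in the order predict_proba returns them.
    """
    # Imported lazily so this module still loads where giskard is not installed.
    import giskard

    # Wrap the prediction function and the data in Giskard's own objects.
    model = giskard.Model(
        model=predict_proba,
        model_type="classification",
        classification_labels=labels,
        feature_names=[c for c in df.columns if c != target],
    )
    dataset = giskard.Dataset(df=df, target=target)

    # Run the automatic scan and keep an HTML copy of the findings.
    scan_results = giskard.scan(model, dataset)
    scan_results.to_html(report_path)

    # Turn the detected issues into a test suite and execute it.
    suite = scan_results.generate_test_suite("Generated from scan")
    results = suite.run()
    return ci_exit_code(results.passed)
```

In a CI job, a small script could call `sys.exit(scan_and_test(...))` so that the pipeline fails whenever a generated test fails after a model is retrained.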