Hugging Face NLP Course

The Hugging Face NLP Course supports Hugging Face's stated goal of advancing and democratizing artificial intelligence through open source and open science. It is entirely free and ad-free, and it teaches natural language processing (NLP) using libraries and tools from the Hugging Face ecosystem.

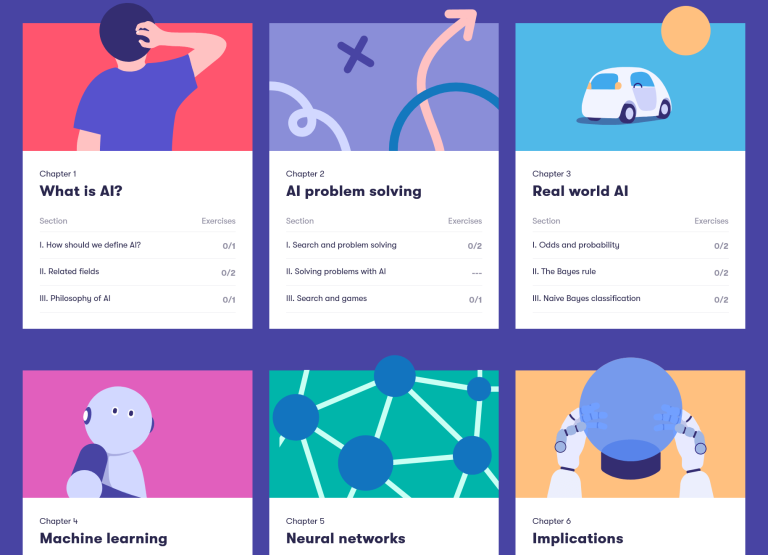

Subjects Covered:

The course covers NLP and Transformer models and is organized into three parts:

Chapters 1 to 4

These chapters introduce the main concepts of the Hugging Face Transformers library. By the end of this part, you will understand how Transformer models work, know how to use a model from the Hugging Face Hub, and be able to fine-tune it on a dataset and share your results on the Hub.

Chapters 5 to 8

These chapters teach the basics of Hugging Face Datasets and Hugging Face Tokenizers before moving on to classic NLP tasks. By the end of this part, you will be able to tackle common NLP problems on your own.

Chapters 9 to 12

These chapters go beyond NLP and explore how Transformer models can be applied to tasks in speech processing and computer vision. Along the way, you will learn how to build and share demos of your models and how to optimize them for production environments. By the end of this part, you will be ready to apply Hugging Face Transformers to a wide range of machine learning problems.

Course Features:

- Utilizes libraries from the Hugging Face ecosystem: Transformers, Datasets, Tokenizers, and Accelerate, along with the Hugging Face Hub.

- Hands-on experience with real-world datasets and tasks, ensuring practical learning.

- In-depth understanding of Transformer models and their applications in NLP, speech processing, and computer vision.

- Collaborative learning environment with access to forums for asking questions and discussing ideas.

- Code examples and exercises provided as Jupyter notebooks that can be run in Google Colab or Amazon SageMaker Studio Lab.

Key Learning Outcomes:

By the end of this course, you should be able to:

- Use libraries from the Hugging Face ecosystem for NLP tasks.

- Explain how Transformer models work.

- Fine-tune models on custom datasets and share the results on the Hugging Face Hub.

- Apply NLP fundamentals to address common NLP challenges independently.

- Apply Transformer models to speech processing and computer vision tasks.

- Build and share demos of your models, and optimize those models for production environments.

Target Audience:

This course is intended for individuals with the following background:

- A solid foundation in Python programming.

- Some familiarity with deep learning concepts is beneficial, but prior knowledge of PyTorch or TensorFlow is not required.

Learners are advised to first complete an introductory deep learning course, such as fast.ai’s Practical Deep Learning for Coders or one of the programs developed by DeepLearning.AI.

Accreditation:

The Hugging Face NLP Course does not currently offer formal certification upon completion. However, the Hugging Face team is working on a certification program for the Hugging Face ecosystem.

Copyright © 2024 EasyWithAI.com

Thank You

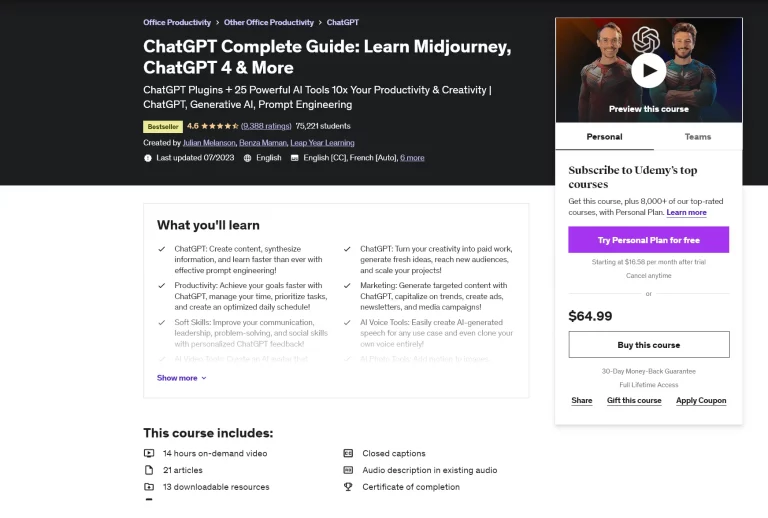

Readers like you help support Easy With AI. When you make a purchase using links on our site, we may earn an affiliate commission at no extra cost to you.