ControlNet, a new extension for Stable Diffusion, recently appeared on GitHub and brings with it a new way to guide the popular AI image generator with a reference image. ControlNet is a method that lets users steer the output of the Stable Diffusion model with structural information taken from an input image, such as its edges, depth, or pose. In this blog post, we will explore what ControlNet is and how it works.

What is ControlNet?

ControlNet is an extension to the Stable Diffusion model that gives users an extra layer of control over image generation. The main idea behind ControlNet is to feed the model additional input conditions, alongside the text prompt, that constrain the layout of the result. With ControlNet, users can make the output follow the composition of an original source image more closely, which makes Stable Diffusion more versatile and applicable to many different use cases.

ControlNet is not a standalone model, but rather an add-on that is attached to an existing Stable Diffusion model. Internally, ControlNet keeps two copies of the network weights: locked and trainable. The locked copy preserves the capabilities of the production-ready diffusion model, while the trainable copy learns new conditions for image synthesis by fine-tuning on small datasets.
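
The split between a frozen copy and a trainable copy can be pictured with a toy example. This is only a sketch, assuming the zero-initialized connection described in the ControlNet paper: the trainable branch is joined to the frozen model through a gain that starts at zero, so attaching it changes nothing until fine-tuning begins. All names here (`locked_block`, `ToyControlBranch`, `zero_gain`) are hypothetical, and single numbers stand in for real network layers.

```python
def locked_block(x):
    """Stand-in for a frozen Stable Diffusion block; its weight never changes."""
    return [2.0 * v for v in x]


class ToyControlBranch:
    """Trainable copy of the block plus a zero-initialized output gain."""

    def __init__(self):
        self.copy_weight = 2.0  # starts as a copy of the frozen weight
        self.zero_gain = 0.0    # zero-initialized connection (assumption, see above)

    def __call__(self, x, condition):
        # The trainable copy sees the input together with the extra condition.
        hidden = [self.copy_weight * (v + c) for v, c in zip(x, condition)]
        return [self.zero_gain * h for h in hidden]


def controlled_block(x, condition, branch):
    """Frozen output plus whatever the trainable branch contributes."""
    base = locked_block(x)
    extra = branch(x, condition)
    return [b + e for b, e in zip(base, extra)]


x = [1.0, 2.0, 3.0]
condition = [0.5, 0.0, -0.5]
branch = ToyControlBranch()

before = controlled_block(x, condition, branch)  # identical to the frozen model
branch.zero_gain = 0.1  # pretend fine-tuning moved the gain away from zero
after = controlled_block(x, condition, branch)   # now the condition has an effect

print(before)
print(after)
```

The point of the design is that the frozen model's behaviour is untouched at the start, and the condition only gains influence as the trainable branch learns.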

How Does ControlNet Work?

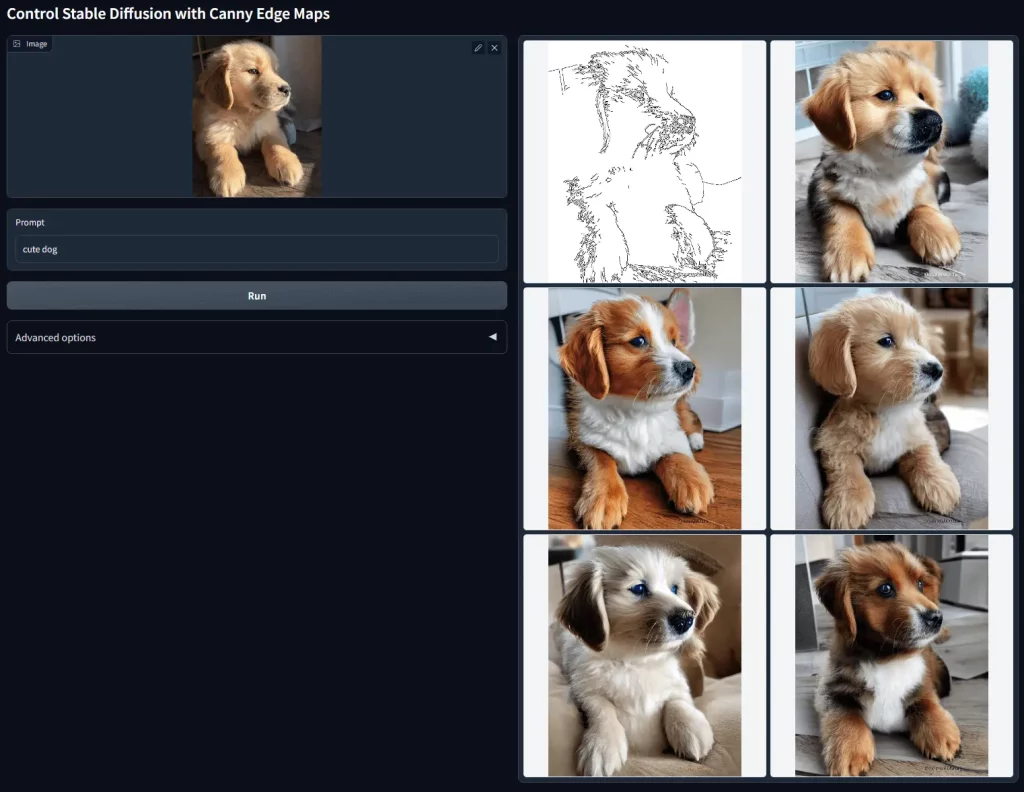

ControlNet works by introducing an additional input condition, often called a control map, to the Stable Diffusion model. The control map is an image that encodes the structure the output should follow, such as outlines, depth, or body pose, rather than a mask of pixels to keep or discard. It can be produced in several ways, for example by drawing a rough outline of the object of interest or by running a preprocessor over an existing image to extract the map automatically. Several preprocessors are already available, including depth, HED, Canny, and OpenPose.
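
To make the canny option more concrete, here is a deliberately simplified sketch of turning an image into an edge-based condition image in plain Python. It is not the real Canny algorithm, which adds smoothing, non-maximum suppression, and hysteresis thresholding; it only thresholds the brightness gradient. The function name `edge_map` and the `threshold` value are hypothetical.

```python
def edge_map(gray, threshold=100):
    """Return a black-and-white edge image (0 or 255) from a grayscale image.

    gray is a list of rows of brightness values in the range 0..255.
    Border pixels are left black because they lack a full neighbourhood.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the brightness gradient.
            gx = gray[y][x + 1] - gray[y][x - 1]
            gy = gray[y + 1][x] - gray[y - 1][x]
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 255
    return edges


# A 6x6 test image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
control_map = edge_map(image)

for row in control_map:
    print("".join("#" if value else "." for value in row))
```

The result marks only the boundary between the dark and bright halves, which is the kind of structural outline an edge-based condition passes on to the model.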

Once the condition image (the control map) is generated, it is passed to the model alongside the text prompt and, on the img2img tab, the input image. The Stable Diffusion model then generates an output image that is conditioned on it. The output follows the structure captured in the control map, such as its edges, depth, or pose, while details like texture and style are free to come from the prompt.

The influence of the condition image (the control map) can be tuned through its weight value, which determines how strictly the output follows it. For example, using the AUTOMATIC1111 web interface, setting the ControlNet weight to 1.5 will make the generated image closely match the original, whereas a weight value of 0.5 and below will generate a more unique image whilst still using the control map as a base.
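
One way to picture the weight setting is as a multiplier on ControlNet's contribution to the model's internal features. The sketch below assumes exactly that; how the extension applies the weight internally is not covered in this post, and `apply_control` and the numbers are hypothetical.

```python
def apply_control(base, residual, weight):
    """Add the ControlNet contribution to the base features, scaled by weight."""
    return [b + weight * r for b, r in zip(base, residual)]


base = [1.0, 1.0, 1.0]        # features from Stable Diffusion on its own
residual = [0.5, -0.5, 0.25]  # adjustment suggested by the condition image

for weight in (0.0, 0.5, 1.0, 1.5):
    print(weight, apply_control(base, residual, weight))
```

Under this assumption, a weight of 0 leaves the base model untouched, and larger weights push the result further towards what the condition image asks for.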

Applications of ControlNet

ControlNet has several potential applications in the field of generative AI, such as image synthesis, image editing, and design and concept creation. Here are some of the ways ControlNet can be used:

Image Synthesis: ControlNet can be used to synthesize images with specific objects or features. For example, by providing a condition image that captures an object of interest, such as an edge map or a pose skeleton, ControlNet can generate new images that keep that object's shape while the rest is left to the prompt. This is similar to existing inpainting techniques, but the added control of the condition image can give much better results!

Image Editing: ControlNet can be used to edit images while preserving their structure. Because the condition image pins down features such as outlines or depth, specific parts of an image can be retained while the rest is regenerated from the prompt. AI applications which focus on image backgrounds and product mock-up photos could find great utility in the extension thanks to this.

Design and Concepts: For those who already have concept art or designs, ControlNet can be a great way to explore variations and alternate art styles. Game developers might wish to use ControlNet to generate hundreds of video game avatars, for example.

Since the extension is new, people are still discovering lots of exciting use cases and ways to use it. It can follow a source image's composition more closely than the original img2img technique, and it can also be used through the txt2img tab. In fact, whether you use ControlNet in txt2img or img2img can make quite a big difference to the output. Using the img2img tab lets you use ControlNet alongside the original method, which can help you copy or match an original image with better accuracy. New concepts and designs might be easier on the txt2img tab.

You can find more about ControlNet over at the official GitHub repo here: https://github.com/lllyasviel/ControlNet

If you’re using AUTOMATIC1111, ControlNet is already available as an extension in the “Extensions” tab. You can simply add it to your interface and reload the UI.