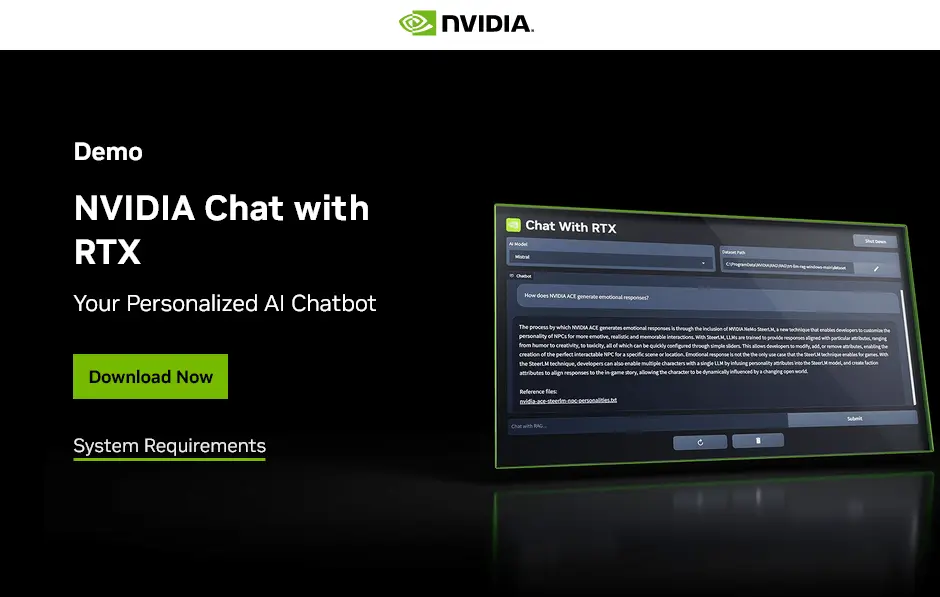

NVIDIA’s Chat with RTX is a personalized AI chatbot that lets you connect a large language model (LLM) to your own content, such as documents, notes, and videos. It’s built on the ‘TensorRT-LLM RAG’ project, which uses retrieval-augmented generation (RAG), and the Tensor Cores found on NVIDIA GPUs let it quickly generate contextually relevant answers to your questions.

Chat with RTX is designed to run locally on Windows PCs with GeForce or Quadro RTX graphics cards, so it requires an NVIDIA GPU with Tensor Cores. The official system requirements call for at least an RTX 30 or 40 Series GPU. It supports file types such as plain text, PDFs, and Word documents, as well as YouTube video transcripts. You can point it at folders or playlists to index their content, so you can build a custom chatbot in seconds.
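
To make the idea of indexing your own content more concrete, here is a minimal sketch of the retrieval step behind a RAG chatbot. It is not how Chat with RTX is implemented: the function names (`build_index`, `retrieve`, `build_prompt`), the sample documents, and the simple bag-of-words scoring are all assumptions made for illustration, and a real system would typically use learned embeddings and pass the resulting prompt to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each document name to a bag-of-words term-count vector."""
    return {name: Counter(tokenize(body)) for name, body in documents.items()}


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(index, question, top_k=1):
    """Return the names of the top_k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(index, key=lambda name: cosine(index[name], query), reverse=True)
    return ranked[:top_k]


def build_prompt(documents, index, question, top_k=1):
    """Prepend the retrieved documents to the question as context for an LLM."""
    context = "\n".join(documents[name] for name in retrieve(index, question, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Hypothetical sample content standing in for files in an indexed folder.
documents = {
    "notes.txt": "The project deadline is Friday and the demo is on Monday.",
    "recipe.txt": "Mix flour, sugar, and eggs, then bake for thirty minutes.",
}
index = build_index(documents)
prompt = build_prompt(documents, index, "When is the project deadline?")
print(prompt)
```

The printed prompt contains only the text of the most relevant document, which is the general reason a RAG setup can answer questions about your files without retraining the model.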